Generative AI and Large Language Models (LLMs) are often used interchangeably. The two are closely related (LLMs are themselves a kind of generative AI), but they differ significantly in purpose, architecture, and capabilities.

In this article, I'll break down the difference between the two, explore the broader implications of generative AI, and critically examine the challenges and limitations of both technologies.

What Is Generative AI?

Generative AI refers to a class of AI systems designed to create new content, whether it's text, images, music, or even video, based on patterns learned from existing data.

How Generative AI Works

At its core, Generative AI functions by learning patterns from vast amounts of data, such as images, text, or sounds.

The process involves training the AI on huge datasets so that it learns these patterns well enough to produce output that resembles the training data without simply copying it.

The "generative" aspect means the AI doesn’t just recognize or classify information; it produces new content. Here’s how:

1. Neural Networks

Generative AI uses neural networks, which are algorithms loosely inspired by how the human brain works.

These networks consist of layers of artificial neurons; each layer transforms the data it receives and passes the result on to the next.

Neural networks can be trained to recognize patterns in data and then generate new data that follows those patterns.
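To make the idea of layers concrete, here is a minimal sketch of a forward pass through a tiny two-layer network in plain Python. The weights are made-up illustrative values, not trained ones; training (not shown) is the process of adjusting them so the network's outputs follow the patterns in the data.

```python
import math

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each neuron takes a weighted sum of all
    inputs plus a bias, then applies a non-linear activation function."""
    return [
        activation(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical, untrained weights: 3 inputs -> 2 hidden neurons -> 1 output.
hidden_w = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]
output_b = [0.2]

def forward(x):
    hidden = dense(x, hidden_w, hidden_b, relu)        # layer 1
    return dense(hidden, output_w, output_b, sigmoid)  # layer 2

print(forward([1.0, 2.0, 3.0]))
```

Each layer only sees the previous layer's output, which is what lets stacked layers build up increasingly abstract patterns.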

2. Recurrent Neural Networks (RNNs)

For tasks that involve sequences, like generating text or music, Recurrent Neural Networks (RNNs) have traditionally been used, although transformers have since largely replaced them for text.

RNNs are a type of neural network designed to process sequential data by keeping a sort of "memory" of what came before.

For example, when generating a sentence, RNNs remember the words that were previously generated, allowing them to craft coherent sentences rather than random strings of words.
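A minimal sketch of that "memory," assuming a vanilla RNN whose hidden state is a single number and whose weights are hand-picked (real RNNs use vectors of learned weights and take word embeddings as input):

```python
import math

def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a vanilla RNN with a scalar hidden state.
    The new state blends the current input with the previous state (the "memory")."""
    return math.tanh(w_x * x + w_h * h_prev + b)

def run_rnn(sequence, w_x=0.8, w_h=0.5, b=0.0):
    """Feed a sequence through the RNN one element at a time."""
    h = 0.0  # the memory starts out empty
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states

# Hypothetical weights; a trained RNN would learn them from data.
print(run_rnn([1.0, 0.0, 0.0]))
print(run_rnn([0.0, 0.0, 1.0]))
```

Even though the later inputs in the first sequence are 0, the hidden state stays non-zero because it still carries information from the first step. Feeding the same values in a different order produces a different final state, which is exactly the order sensitivity that text generation needs.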

3. Generative Adversarial Networks (GANs)

GANs work by pitting two neural networks against each other.

One network, the Generator, creates content (like an image), while the other network, the Discriminator, judges whether that content looks real or fake.

The Generator learns from the Discriminator’s feedback, gradually improving until, ideally, it produces content that’s indistinguishable from real data.

This method is particularly effective in generating high-quality images and videos.
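This tug-of-war is usually expressed as two loss functions, one per network, that each side tries to minimize. The sketch below assumes the Discriminator outputs a probability between 0 and 1 that a sample is real, and uses the common "non-saturating" Generator loss proposed in the original GAN paper. In a real GAN these losses are averaged over batches and minimized with gradient descent, which is not shown here.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the Discriminator.

    d_real: probability the Discriminator assigns to a real sample being real (it wants -> 1).
    d_fake: probability it assigns to a generated sample being real (it wants -> 0).
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating Generator loss: small when the Discriminator is fooled (d_fake -> 1)."""
    return -math.log(d_fake)

# Early in training, the Discriminator easily spots fakes, so the Generator's loss is high...
print(generator_loss(0.1), discriminator_loss(0.9, 0.1))
# ...later, convincing fakes lower the Generator's loss and raise the Discriminator's.
print(generator_loss(0.9), discriminator_loss(0.9, 0.9))
```

Because each network's improvement makes the other's loss worse, training alternates between updating the Discriminator and updating the Generator.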

Examples of Generative AI

- Image generators:

  - DALL-E: Generates highly detailed images from textual descriptions, translating language into visual form.

  - Stable Diffusion: Lets users generate a wide range of images, from realistic portraits to fantastical landscapes.

- Music generators:

- Video tools:

  - Runway: A versatile platform offering a suite of tools for video editing, animation, and generation. It can be used to create everything from simple animations to complex visual effects.

  - Topaz Video AI: Software that specializes in enhancing and restoring video footage, using AI to improve quality, reduce noise, and even increase resolution.

What Are Large Language Models (LLMs)?

Large Language Models (LLMs) are a specialized form of artificial intelligence designed to understand and generate human language with remarkable proficiency.

Unlike general generative AI, which can create a variety of content, LLMs focus specifically on processing and producing text, making them integral to tasks like translation, summarization, and conversational AI.

How LLMs Work

LLMs are a product of Natural Language Processing (NLP), the branch of AI dedicated to understanding and interpreting human language. Like most NLP systems, they begin by tokenizing text:

Tokenization

Tokenization breaks text into smaller units, typically words or subwords, which are called tokens.

For instance, the sentence "I love AI" might be tokenized as ["I", "love", "AI"]. These tokens serve as the building blocks for the model's understanding.
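The sketch below contrasts whitespace splitting with a toy subword tokenizer that greedily matches the longest piece it knows. The five-entry vocabulary is hypothetical; real LLM tokenizers (for example, ones based on byte-pair encoding) learn vocabularies of tens of thousands of tokens from data. The last step maps tokens to integer IDs, since models work with numbers rather than raw text.

```python
def word_tokenize(text):
    """Simplest possible tokenizer: split on whitespace."""
    return text.split()

def subword_tokenize(word, vocab):
    """Greedy longest-match split of one word against a fixed vocabulary.
    Falls back to a single character when no vocabulary entry matches."""
    tokens = []
    start = 0
    while start < len(word):
        # Try the longest remaining piece first, shrinking it until it matches.
        for end in range(len(word), start, -1):
            piece = word[start:end]
            if piece in vocab or end == start + 1:
                tokens.append(piece)
                start = end
                break
    return tokens

# Hypothetical toy vocabulary, for illustration only.
VOCAB = {"I", "love", "AI", "token", "ization"}
# Models consume integer IDs, so each vocabulary entry gets a number.
TOKEN_IDS = {token: i for i, token in enumerate(sorted(VOCAB))}

words = word_tokenize("I love AI")
print(words)                                    # ['I', 'love', 'AI']
print([TOKEN_IDS[w] for w in words])            # [1, 3, 0]
print(subword_tokenize("tokenization", VOCAB))  # ['token', 'ization']
```

Subword splitting is what lets a model handle words it has never seen whole: "tokenization" is built from two known pieces instead of being treated as unknown.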

Transformers

LLMs typically use an architecture called the transformer, which has revolutionized natural language processing since its introduction in 2017.

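Transformers use a mechanism called self-attention to weigh how strongly each token relates to every other token in its context, and they learn these relationships by training on massive datasets.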
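Here is a minimal sketch of scaled dot-product self-attention in plain Python. It makes two simplifying assumptions: the token vectors are hand-picked two-dimensional embeddings, and the queries, keys, and values are the embeddings themselves, whereas a real transformer first multiplies them by learned projection matrices.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention over a list of token vectors.

    Simplification: queries, keys and values are the input vectors themselves.
    """
    d = len(vectors[0])
    outputs, all_weights = [], []
    for q in vectors:
        # How well this token's query matches every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in vectors]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is a weighted average of all value vectors.
        outputs.append([sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(d)])
    return outputs, all_weights

tokens = ["I", "love", "AI"]
# Hypothetical 2-dimensional embeddings, chosen by hand for illustration.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
_, attention = self_attention(embeddings)
for token, row in zip(tokens, attention):
    print(token, [round(w, 2) for w in row])
```

With these vectors, "AI" attends most strongly to itself, while "I" splits its attention equally between itself and "AI" because their vectors overlap. A real model learns its embeddings and projections and runs many attention heads in parallel.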

In simple terms, think of LLMs as supercharged autocomplete: they repeatedly predict the next token, which lets them write essays, answer complex questions, or summarize articles.
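The autocomplete analogy can be illustrated with a deliberately crude stand-in: a model that only counts which word follows which in a tiny hypothetical corpus, then greedily appends the most frequent next word. An LLM replaces these counts with a transformer that considers the whole preceding context, but the generate-one-token-at-a-time loop is the same idea.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count which word follows which in a (tiny) training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def autocomplete(follows, prompt, max_new_words=4):
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # nothing ever followed this word in the training text
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

# Hypothetical training text, for illustration only.
corpus = ("generative ai can write text and generative ai can draw images "
          "and llms can write text")
model = train_bigrams(corpus)
print(autocomplete(model, "llms", max_new_words=3))  # llms can write text
```

After "can", the model picks "write" over "draw" simply because it saw "can write" twice and "can draw" once.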

Examples of LLMs

- Text generation:

  - GPT-3: One of the best-known LLMs, capable of generating human-like text, from essays to poetry.

  - GPT-4: Its more advanced successor, with improved accuracy and reasoning. When used through ChatGPT, it can also draw on a memory feature that retains information from previous conversations.

  - Gemini: A notable family of models from Google, focused on text generation and understanding.

Generative AI and LLMs: A Unique Bond

Now that you’re familiar with the basics of generative AI and large language models (LLMs), let's explore the transformative potential of combining these technologies.

Here are some ideas:

Content Creation

For writers like me who have ever struggled with writer's block, combining LLMs and generative AI makes it possible to create unique, contextually relevant content across various media: text, images, and even music.

Talk to Your Documents

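A fascinating real-world use case is the ability for businesses and individuals to upload or scan documents and then interact with them conversationally.

You can ask specific questions about the content, generate summaries, or request further insights, and with tools that run entirely on your own hardware, you can do so without sending sensitive data to an outside service.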

This approach is particularly valuable in fields where data confidentiality is crucial, such as law, healthcare, or education.

We have covered one such project called PrivateGPT.
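Under the hood, "talk to your documents" tools commonly follow a retrieve-then-generate pattern (often called retrieval-augmented generation, or RAG): find the passages most relevant to a question, then hand them to an LLM as context. The toy sketch below assumes a short plain-text document and scores sentences by simple word overlap; real tools typically compare embedding vectors instead, and the final LLM call is left out. All function names here are hypothetical and are not PrivateGPT's API.

```python
import re

def split_into_chunks(text):
    """Split a document into sentence-sized chunks."""
    return re.split(r"(?<=[.!?])\s+", text.strip())

def word_set(text):
    """Lowercased words, ignoring punctuation."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, chunks):
    """Return the chunk that shares the most words with the question."""
    q_words = word_set(question)
    return max(chunks, key=lambda chunk: len(q_words & word_set(chunk)))

def build_prompt(question, context):
    """Combine the retrieved context and the question into one prompt.
    Sending it to a locally running LLM is not shown here."""
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical document, for illustration only.
document = (
    "The lease runs for twelve months starting in January. "
    "Rent is due on the first day of each month. "
    "Pets are not allowed without written approval from the landlord."
)
chunks = split_into_chunks(document)
question = "When is the rent due?"
print(build_prompt(question, retrieve(question, chunks)))
```

Only the retrieved passage, not the whole document, is placed in the prompt, which keeps it short and points the model at the relevant facts.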

Enhanced Chatbots and Virtual Assistants

No one likes the generic responses of customer service chatbots. Combining LLMs and generative AI can power advanced chatbots that handle complex queries more naturally.

For instance, an LLM might help a virtual assistant understand a customer’s needs, while generative AI crafts detailed and engaging responses.

Open-source projects like Rasa, a customizable chatbot framework, have made this technology accessible to businesses that want privacy and flexibility.

Improved Translation and Localization

When combined, LLMs and generative AI can significantly improve translation accuracy and cultural sensitivity.

For example, an LLM could handle the linguistic nuances of a language like Arabic, while generative AI produces culturally relevant images or content for the same audience.

Open-source projects such as Marian NMT, a neural machine translation toolkit, and Unbabel's Tower, a translation-focused LLM, show promise in this area.

Still, challenges remain, especially with idiomatic expressions and regional dialects, where AI can stumble.

Challenges and Limitations

Both Generative AI and LLMs face significant challenges, many of which raise concerns about their real-world applications:

Bias

Generative AI and LLMs learn from the data they are trained on. If the training data contains biases (e.g., discriminatory language or stereotypes), the AI will reflect those biases in its output.

This issue is especially problematic for LLMs, which are trained largely on text gathered from the internet, much of which contains biases.

Hallucinations

A particularly tricky problem for LLMs is "hallucination," where the model generates false or nonsensical information with unwarranted confidence.

An image generator might create something visually incoherent that’s easy to detect, like a distorted image.

An LLM, by contrast, can subtly present incorrect information in a way that appears entirely plausible, making it much harder to spot.

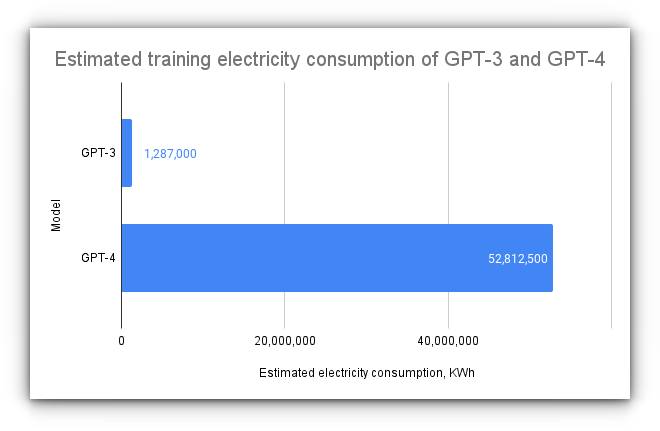

Resource Intensiveness

Training both generative AI and LLMs requires vast computational resources. It’s not just about processing power, but also storage and energy.

This raises concerns about the environmental impact of large-scale AI training.

Ethical Concerns

The ability of generative AI to produce near-perfect imitations of images, voices, and even personalities poses ethical questions.

How do we differentiate between AI-generated and human-made content? With LLMs, the question becomes: how do we prevent the spread of misinformation or misuse of AI for malicious purposes?

Key Takeaways

The way generative AI and LLMs complement each other is mind-blowing. Whether it’s generating vivid imagery from simple text or creating human-like conversations, the possibilities seem endless.

However, one of my biggest concerns is that some companies are training their models on user data without explicit permission.

This practice raises serious privacy issues: if everything we do online is being fed into AI, what’s left that’s truly personal or private? It feels like we’re inching closer to a world where data ownership becomes a relic of the past.