If you ask me, Microsoft has been one of the biggest driving forces behind Linux adoption in recent years. The way they've been handling Windows, with its forced updates, aggressive telemetry, and questionable AI features, has sent more people to Linux than any marketing campaign ever could.

And they are at it again with a new AI feature that could be tricked into installing malware on your system.

Isn't This Too Much?

Microsoft is rolling out a new experimental feature called "Copilot Actions" to Windows Insiders. They pitch it as an AI agent that handles tasks you describe to it: organizing vacation photos, sorting your Downloads folder, extracting info from PDFs, that sort of thing.

It is currently available in Windows 11 Insider builds (build 26220.7262) as part of Copilot Labs. It is off by default and requires admin access to set up.

But here's the catch. Copilot Actions doesn't just suggest what to do. It runs in a separate environment called "Agent Workspace" with its own user account, clicking through apps and working on your files.

Microsoft says it has "capabilities like its own desktop" and can "interact with apps in parallel to your own session." And that's where the problems start.

In a support document, Microsoft admits that features like Copilot Actions introduce "novel security risks." It warns about cross-prompt injection attacks (XPIA), where malicious content embedded in documents or UI elements can override the AI's instructions.

The result? "Unintended actions like data exfiltration or malware installation."

Yeah, you read that right. Microsoft is shipping a feature that could be tricked into installing malware on your system.

Microsoft's own warning hits hard: "We recommend that you only enable this feature if you understand the security implications."

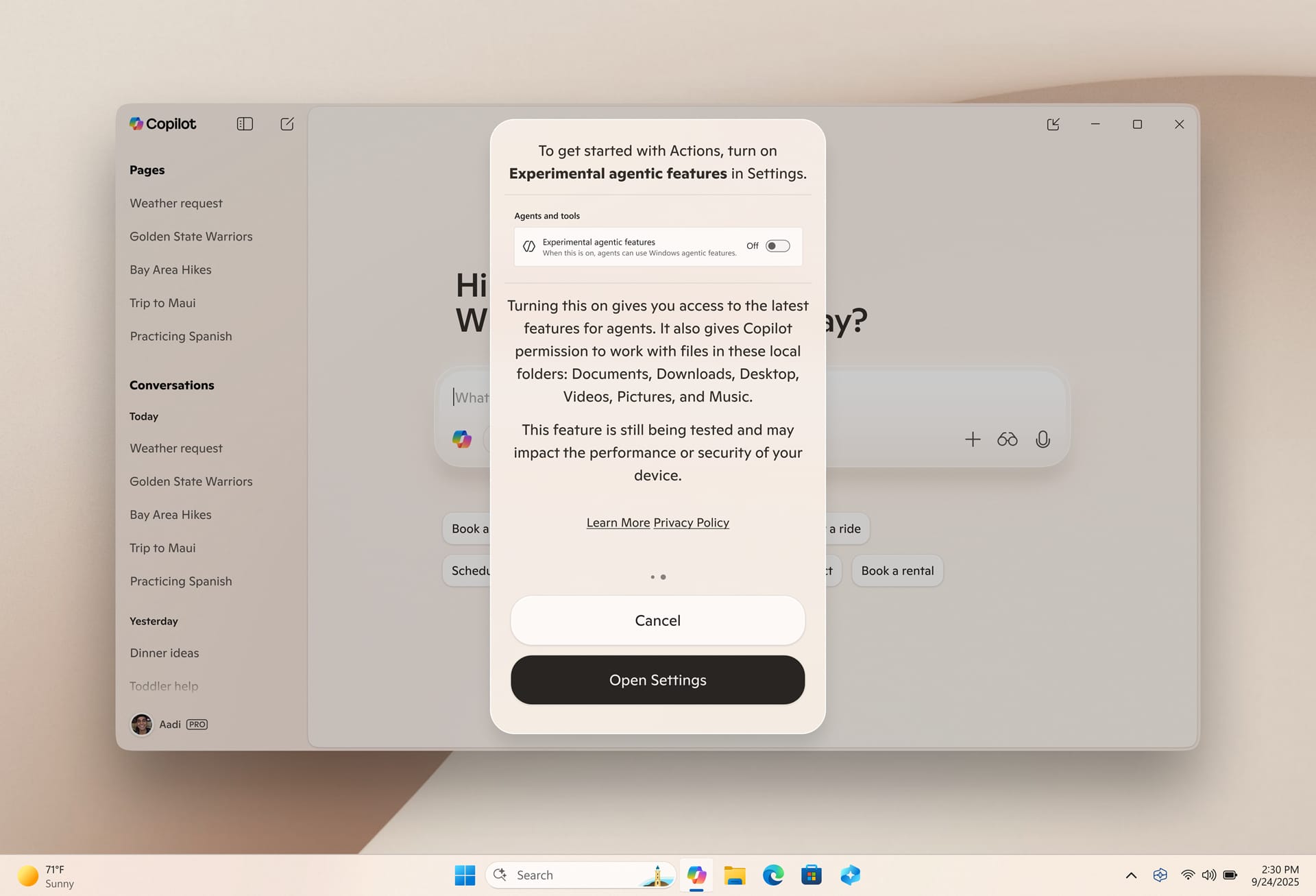

When you try to enable these experimental features, Windows shows you a warning dialog that you have to acknowledge. 👇

Even with these warnings, the level of access Copilot Actions demands is concerning. When you enable the feature, it gets read and write access to your Documents, Downloads, Desktop, Pictures, Videos, and Music folders.

Windows Latest notes that Copilot Actions differs from Windows Sandbox, which runs in complete isolation and gets wiped clean when you close it. Copilot Actions instead operates in "Agent Workspace" with persistent user accounts that keep access to these folders across sessions. Keep in mind that the feature can also access any apps installed for all users on the system.

Microsoft says it is implementing safeguards: users must approve data access requests, the feature operates in isolated workspaces, and all of its activity is recorded in audit logs.

But you are still giving an AI system that can "hallucinate and produce unexpected outputs" (Microsoft's words, not mine) read and write access to your personal files.

Closing Thoughts

There is a pattern here. Microsoft seems obsessed with shoving AI into every corner of Windows, whether users want it or not and whether it's ready or not, all while playing fast and loose with its users' data.

This is why Linux keeps gaining traction. There are no AI experiments that could install malware, and no fighting against features you never asked for. Plus, the most likely way you'll nuke your installation is by running something like `rm -rf` yourself, not because Copilot got confused by a malicious PDF.

If Microsoft's AI experiments are making you uncomfortable, then there are plenty of Linux distributions that respect your privacy and put you in control.
