IBM has been steadily building its open source AI presence with the Granite model family. These enterprise-focused language models prioritize safety, transparency, and practical deployment over chasing the biggest parameter counts.

IBM recently launched Granite 4.0, which introduces a hybrid architecture designed to be more efficient. The goal? Smaller models that can compete with much larger ones.

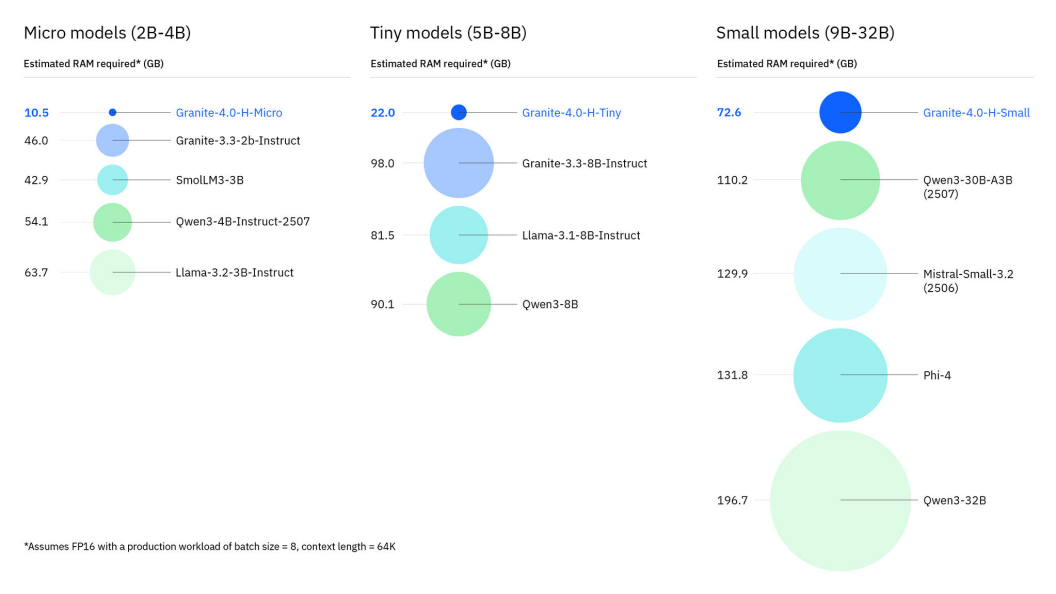

The results are impressive. The smallest Granite 4.0 models outperform the previous Granite 3.3 8B despite being less than half its size.

Granite 4.0: What Does It Offer?

Developed as an open source model family under the Apache 2.0 License, Granite 4.0 is IBM's latest attempt at making AI more accessible and cost-effective for enterprises.

It is powered by a hybrid architecture that combines two technologies.

The first is Mamba-2, a state-space model whose compute grows linearly with sequence length and whose memory use stays constant regardless of context length. The second is the traditional transformer, which handles nuanced local context through self-attention.

By combining them in a 9:1 ratio (90% Mamba-2 layers, 10% transformer layers), IBM says it reduced RAM requirements by over 70% compared to conventional transformer models.
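To get a feel for why the layer mix matters, here is a rough back-of-the-envelope sketch in Python. It is not IBM's methodology: the layer count, head sizes, and per-layer Mamba-2 state size are made-up illustrative values, and the function names are hypothetical. It only models inference-time cache memory (the attention key/value cache, which grows with context, versus a fixed-size recurrent state), not model weights.

```python
# Illustrative estimate only. All default sizes below are assumptions chosen
# for the example; they are NOT Granite 4.0's published configuration.


def hybrid_layer_counts(total_layers: int, ssm_per_attention: int = 9) -> tuple[int, int]:
    """Split a layer stack into (ssm_layers, attention_layers) at a 9:1 ratio."""
    attention_layers = total_layers // (ssm_per_attention + 1)
    return total_layers - attention_layers, attention_layers


def kv_cache_bytes(attention_layers: int, context_len: int,
                   kv_heads: int = 8, head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Key/value cache size: grows linearly with the number of cached tokens."""
    # Factor of 2 covers one key tensor and one value tensor per layer.
    return 2 * attention_layers * context_len * kv_heads * head_dim * bytes_per_value


def ssm_state_bytes(ssm_layers: int,
                    state_bytes_per_layer: int = 4 * 1024 * 1024) -> int:
    """Recurrent state size: fixed per layer, independent of context length."""
    return ssm_layers * state_bytes_per_layer


def inference_cache_bytes(total_layers: int, context_len: int, hybrid: bool) -> int:
    """Total cache memory for one sequence, for a pure transformer or a 9:1 hybrid."""
    if hybrid:
        ssm_layers, attention_layers = hybrid_layer_counts(total_layers)
    else:
        ssm_layers, attention_layers = 0, total_layers
    return kv_cache_bytes(attention_layers, context_len) + ssm_state_bytes(ssm_layers)


if __name__ == "__main__":
    for context_len in (8_192, 131_072):
        dense = inference_cache_bytes(40, context_len, hybrid=False)
        mixed = inference_cache_bytes(40, context_len, hybrid=True)
        print(f"{context_len:>7} tokens: "
              f"transformer {dense / 2**30:.2f} GiB, hybrid {mixed / 2**30:.2f} GiB")
```

Under these assumed numbers, the gap widens as the context grows, because only the few attention layers keep a cache that scales with the number of tokens. The exact savings in a real deployment depend on weights, batch size, and the actual model configuration, so treat this as intuition rather than a reproduction of IBM's 70% figure.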

To back up these claims, IBM benchmarked Granite 4.0 extensively. On IFEval, an instruction-following benchmark, Granite 4.0-H-Small scored 0.89, beating every open-weight model except Llama 4 Maverick, which is 12 times larger.

IBM also partnered with EY and Lockheed Martin for early testing at scale. Feedback from these enterprise partners will help shape future updates.

Granite 4.0 comes in three sizes: Micro, Tiny, and Small. Each is available in Base and Instruct variants.

The Small model is the workhorse for enterprise workflows like multi-tool agents and customer support automation. Meanwhile, Micro and Tiny are designed for edge devices and low-latency applications, and there are plans to release even smaller Nano variants by year-end.

Want To Check It Out?

Granite 4.0 is available through multiple platforms.

You can try it for free on the Granite Playground. For production deployment, the models are available on IBM watsonx.ai, Hugging Face, Docker Hub, Ollama, NVIDIA NIM, LM Studio, Kaggle, Replicate, and Dell Technologies platforms.

You can find guides and tutorials in the Granite Docs to get started.
