In May of last year, the cURL project's bug bounty program was inundated with AI slop: a wave of bogus reports opened on HackerOne that left the cURL maintainers sifting through garbage.

The problem didn't stop even after Daniel Stenberg, the creator of cURL, threatened to ban anyone whose bug report was found to be AI slop. We are now in 2026, and the situation has reached a tipping point.

cURL Says Enough is Enough

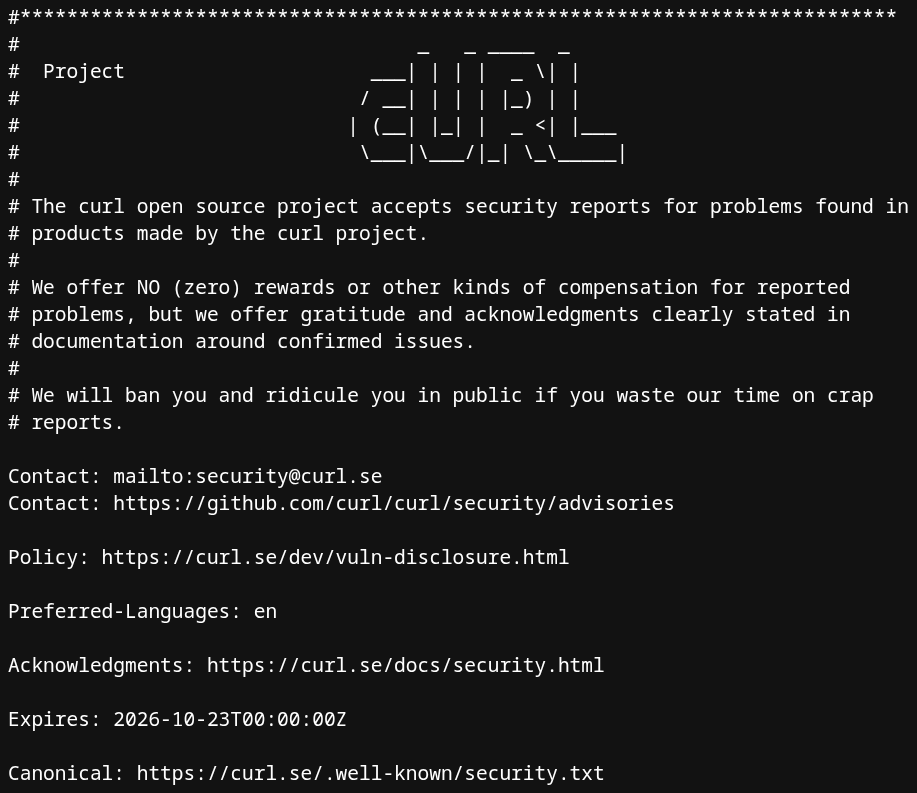

Daniel has submitted a pull request on GitHub that removes all mentions of the bug bounty program from cURL's documentation and website. At the same time, the project's security.txt file has been updated with blunt language that makes the new policy crystal clear.

The cURL team intends to make a proper announcement in the coming days, though many outlets have already covered the news, so I would say they ought to get on it ASAP! 😆

The program officially ends in a few days on January 31, 2026. After that, security researchers can still report issues through GitHub or the project's mailing list, but there won't be any cash involved.

What pushed them over the edge, you ask? Well, just weeks into 2026, seven HackerOne reports came in within a single 16-hour period during one week. Some described actual bugs, but none of them were security vulnerabilities. By the time Daniel posted his recent weekly report, the team had already dealt with 20 submissions in 2026.

The stated goal is to stop the flood of garbage reports. By eliminating the financial incentive, the team hopes people (or bots?) will stop wasting the security team's time with half-baked, unresearched submissions.

Daniel also has a stern warning for would-be AI sloppers:

"This is a balance of course, but I also continue to believe that exposing, discussing and ridiculing the ones who waste our time is one of the better ways to get the message through: you should NEVER report a bug or a vulnerability unless you actually understand it - and can reproduce it. If you still do, I believe I am in the right to make fun of - and be angry at - the person doing it."

So, yeah, that's that. If people still don't understand that AI slop is harmful to such sensitive pieces of software, then sure, they can go ahead and make a fool of themselves.
